There are two kinds of people who work in PPC – the strategists and the analysts.

Strategists are those who have “light-bulb” moments; they come up with a great idea for their account and roll with it. They are confident in what they’re implementing, and their decisions are based on intuition:

“The weather is cold today and nobody will want to buy my client’s sunscreen. I’m going to reduce my max CPC bids by 20%!”

This mindset is beneficial in terms of anticipating changes and reacting quickly, but because these decisions rest largely on instinct, they can put performance at risk.

Analysts, on the other hand, tend not to act unless they have direct proof of a correlation. They base all their decisions on what the data is telling them, which is far safer, but they are nervous about making any changes that could have a negative effect. As a result, they tend to shy away from implementing innovative ideas (Akin Laguda, 2016).

Although both strategists and analysts make great PPC employees, they each have their limitations. But do not fear! Through its “Drafts & Experiments” feature, AdWords lets us test large changes within our accounts while limiting the risk to performance, thus satisfying both mindsets whilst reducing the impact of their flaws.

What are AdWords Experiments?

As someone who tends towards the “Analyst” mindset myself, I believe that all decisions should be based, to some extent, on data. However, I appreciate that sometimes it’s necessary to take risks to achieve success.

But there is such a thing as a calculated risk…

Using the “Drafts & Experiments” feature in AdWords, you can test major changes to a campaign on only a portion of its traffic (e.g. 25%), while the rest of the traffic continues to run on the original settings. Once your experiment has finished, or has run for a sufficient period of time, you can compare the experimental data with the data from your original settings and see whether the changes have had a positive or negative impact on performance. At this point, you can decide whether to roll the changes out to the whole campaign or scrap them altogether.
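To make the idea of testing on a portion of your traffic more concrete, here is a hypothetical sketch of how a 25% split could be assigned. It is not Google’s implementation: the function name `assign_arm`, the salt and the idea of hashing a user or cookie ID are illustrative assumptions. Hashing keeps each user in the same group for the whole test, so they consistently see either the original or the experimental settings.

```python
import hashlib


def assign_arm(user_id: str, experiment_share: float = 0.25, salt: str = "exp-001") -> str:
    """Deterministically assign a user to 'experiment' or 'control'.

    Hypothetical illustration only: hashing a salted user/cookie ID means the
    same user always lands in the same arm for the duration of the test.
    """
    if not 0.0 < experiment_share < 1.0:
        raise ValueError("experiment_share must be between 0 and 1")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) onto a bucket in [0, 10000).
    bucket = int(digest[:8], 16) % 10_000
    return "experiment" if bucket < experiment_share * 10_000 else "control"


if __name__ == "__main__":
    arms = [assign_arm(f"user-{i}") for i in range(100_000)]
    share = arms.count("experiment") / len(arms)
    # Should be close to the requested 25% share.
    print(f"Share of users in experiment arm: {share:.3f}")
```

Because the assignment is random with respect to things like the weather or competitor activity, those factors end up spread evenly across both groups.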

What are the benefits?

- Reduces risk as you can test theories without committing your whole account to the changes.

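- The test environment is far more controlled: because the experiment and the original campaign run at the same time, confounding variables* affect both groups equally. By contrast, if you applied a change to your whole account for a few weeks and measured the results against the previous time period, any difference could be caused by those variables rather than by your change.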

*Confounding variables are aspects of an experiment that cannot be kept constant (e.g. the weather, national holidays, competitor activity, recent news related to product/service).
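The point about confounding variables can be shown with simple arithmetic. The sketch below uses made-up figures for the sunscreen example from earlier: assume the change being tested genuinely improves the conversion rate by 10%, but a cold spell cuts demand by 30% in the weeks after the change goes live. All constants and function names are hypothetical.

```python
# Hypothetical figures for the sunscreen example (all assumed, not real data).
BASE_CONV_RATE = 0.05       # conversion rate in normal weather
COLD_WEATHER_FACTOR = 0.70  # confounder: cold weather multiplies demand by 0.7
TRUE_LIFT = 1.10            # real effect of the change being tested: +10%


def expected_conv_rate(cold: bool, changed: bool) -> float:
    """Expected conversion rate under the given weather and settings."""
    rate = BASE_CONV_RATE
    if cold:
        rate *= COLD_WEATHER_FACTOR
    if changed:
        rate *= TRUE_LIFT
    return rate


def relative_change(new: float, old: float) -> float:
    return new / old - 1


# Before/after on the whole account: old settings in warm weeks, new settings
# in cold weeks, so the weather and the change are mixed together.
before_after = relative_change(expected_conv_rate(cold=True, changed=True),
                               expected_conv_rate(cold=False, changed=False))

# Concurrent split: both arms run in the same cold weeks, so the weather
# affects them equally and cancels out of the ratio.
concurrent = relative_change(expected_conv_rate(cold=True, changed=True),
                             expected_conv_rate(cold=True, changed=False))

print(f"Before/after estimate:     {before_after:+.0%}")  # -23%
print(f"Concurrent split estimate: {concurrent:+.0%}")    # +10%
```

The before/after comparison wrongly suggests the change cut conversions by about 23%, because it attributes the weather effect to the change; the concurrent split recovers the true +10%.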

When can you use AdWords Experiments?

You can use AdWords Experiments in a variety of circumstances. Indeed, you can arguably use experiments to test any change. However, I personally would avoid using experiments for small bid adjustments, as these are low-risk tweaks rather than full-blown changes.

AdWords Experiments are especially useful for testing:

- Bidding strategies

- Ad landing pages

- Ad copy changes

- Display placements

Step-By-Step Guide to Implementation (NEW INTERFACE):

Set Up:

- Click on Drafts & Experiments

- If your experiment relates to ad copy, click on the AD VARIATIONS tab and create your experiment within this tab. Otherwise, create a draft within the CAMPAIGN DRAFTS tab.

- Make one change within the draft (e.g. change the bidding strategy from manual CPC to target CPA).

- Click Apply and then select Run an experiment.

- Name the experiment and choose the start date, end date and experiment split (recommended end date: 3 months after the start date; recommended split: 50%, or 25% if the campaign has a very large traffic volume).

Monitoring:

- Open the CAMPAIGN EXPERIMENTS tab or the AD VARIATIONS tab (under Drafts & Experiments).

- View the performance metrics at the top of the page:

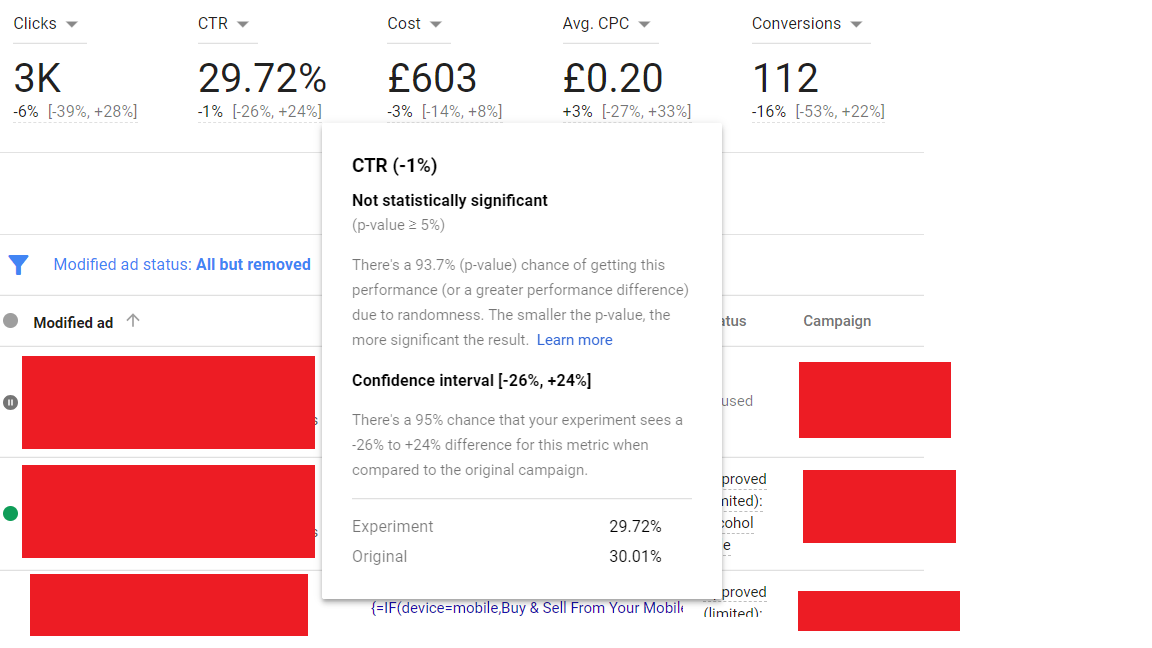

The above experiment is testing an ad variation (changing headline 2 across all ads to advertise “Free Online Registration”).

Unlike the old interface, which displayed the experimental and original data side by side, the new interface shows only the experimental data, with the percentage difference from the original displayed beneath each metric. The figures in square brackets are the confidence interval, and if you hover your mouse over a key metric, more in-depth statistical information is given.

In the above case, although a large volume of clicks and conversions has been obtained during this experiment, none of the percentage differences displayed are statistically significant (p-values > 0.05). Therefore, you cannot draw any valid conclusions about the effect of the change from these results.

The confidence interval gives the margin of error around the estimated percentage difference. You may be tempted to think that, even when the differences are NOT statistically significant, the confidence interval still gives you a useful estimate of where the true figure lies. In practice, it does not help you decide anything: a non-significant difference typically has an interval that spans zero, so it is compatible with both a positive and a negative effect. The confidence interval only serves to assess the precision of the estimated percentage difference between the control and experimental data for each metric. Therefore, you only need to consider the confidence interval once the difference is statistically significant.
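AdWords calculates the percentage difference, p-value and confidence interval for you, and its exact method is not described here. As a rough way to see how these three numbers relate, the sketch below compares a conversion rate (conversions / clicks) between the two arms, using a standard pooled two-proportion z-test for the p-value and a log-ratio approximation for the confidence interval. The function name `compare_rates` and the example figures are hypothetical, and the results will not necessarily match what the interface shows.

```python
import math
from statistics import NormalDist


def compare_rates(ctrl_trials, ctrl_successes, exp_trials, exp_successes, alpha=0.05):
    """Compare a rate (e.g. conversions / clicks) between control and experiment.

    Returns the relative difference, a two-sided p-value from a pooled
    two-proportion z-test, and a (1 - alpha) confidence interval for the
    relative difference using the log-ratio (delta method) approximation.
    """
    p_c = ctrl_successes / ctrl_trials
    p_e = exp_successes / exp_trials
    rel_diff = p_e / p_c - 1

    # Pooled two-proportion z-test for H0: both arms have the same rate.
    pooled = (ctrl_successes + exp_successes) / (ctrl_trials + exp_trials)
    se = math.sqrt(pooled * (1 - pooled) * (1 / ctrl_trials + 1 / exp_trials))
    z = (p_e - p_c) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value

    # CI for the ratio p_e / p_c on the log scale, converted back to a % difference.
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se_log = math.sqrt((1 - p_e) / exp_successes + (1 - p_c) / ctrl_successes)
    log_ratio = math.log(p_e / p_c)
    ci = (math.exp(log_ratio - z_crit * se_log) - 1,
          math.exp(log_ratio + z_crit * se_log) - 1)

    return {"rel_diff": rel_diff, "p_value": p_value, "ci": ci,
            "significant": p_value < alpha}


if __name__ == "__main__":
    # Hypothetical data: 10,000 clicks per arm, 500 conversions in control.
    for exp_conversions in (520, 600):
        r = compare_rates(10_000, 500, 10_000, exp_conversions)
        lo, hi = r["ci"]
        print(f"{exp_conversions} conversions: {r['rel_diff']:+.1%} "
              f"[{lo:+.1%}, {hi:+.1%}], p = {r['p_value']:.3f}, "
              f"significant: {r['significant']}")
```

With 520 conversions in the experiment arm, the difference is not significant (p > 0.05) and the interval runs from a loss to a gain, so it says little about the direction of the effect; with 600 conversions, the p-value falls below 0.05 and the whole interval sits above zero.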

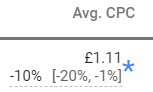

In the above example, the experiment needs to run for longer, until all the key metrics show statistically significant differences. The platform displays a small blue asterisk (see below) next to statistically significant differences, so you don’t need to work this out for yourself.

Final thoughts:

I highly recommend the use of AdWords Experiments when making major changes within your accounts. This feature is beneficial in terms of reducing risks to performance and making decisions that are backed by data rather than by intuition alone.

Top Tips:

- Make sure your experiment is named appropriately so you know what is being tested from a glance. Don’t name your experiment “Experiment 1” – this tells you NOTHING!

- You can only have one experiment running per campaign at a time. Believe it or not, this is a good thing as it prevents interference. Remember in science class when you were taught to change only one variable per experiment?

- Let your experiments run for at least two months (or one month if the campaign drives a lot of traffic). The larger the data sets, the more robust the comparison.

- Set your end date to 3 months after the start date, regardless of when you plan to end the experiment, as you cannot extend the end date once the experiment has completed.

- Only make decisions when the experimental data is significantly different from the control data. If the p-value is greater than 0.05, any perceived differences could simply be due to chance. You need to let the experiment run longer so that the data sets grow in size.

- As experiments require a large volume of data, only use them for campaigns that acquire a large volume of traffic. This is especially important if testing bidding strategies.
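How long is long enough depends on your traffic, your baseline conversion rate and the size of change you want to detect. The sketch below uses a standard sample-size approximation for comparing two conversion rates (5% significance level, 80% power) to estimate the clicks needed per arm, then converts that into days for a given split. The function names, the 5% baseline rate, the 10% target lift and the 1,000 clicks a day are illustrative assumptions, not AdWords settings.

```python
import math
from statistics import NormalDist


def clicks_needed_per_arm(base_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate clicks each arm needs to detect `relative_lift` in a
    conversion rate with a two-sided two-proportion z-test."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(daily_clicks, experiment_share, n_per_arm):
    """Days until the *smaller* arm has collected n_per_arm clicks."""
    smaller_share = min(experiment_share, 1 - experiment_share)
    return math.ceil(n_per_arm / (daily_clicks * smaller_share))


if __name__ == "__main__":
    # Hypothetical campaign: 5% conversion rate, hoping to detect a 10% lift.
    n = clicks_needed_per_arm(base_rate=0.05, relative_lift=0.10)
    print(f"Clicks needed per arm: {n}")
    for share in (0.50, 0.25):
        print(f"{share:.0%} split at 1,000 clicks/day: "
              f"{days_needed(1_000, share, n)} days")
```

Under those assumptions it works out at roughly 31,000 clicks per arm: about two months at a 50% split, but about four months at a 25% split, because the smaller arm takes longest to fill up. This is one reason a 50% split suits most campaigns, with smaller splits reserved for very high-traffic ones.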

*Featured images are CC0 Licensed from pixabay.com.