How do you run your ad copy tests for small accounts? We’d be interested in hearing! Since labels launched in the AdWords interface, running and reviewing simple ad copy tests has become much, much easier.

This is especially true if you’re running an ad copy test across multiple ad groups at once. Pooling ad groups can make the results a bit more difficult to evaluate, but for a lot of small accounts it’s the only way to progress quickly.

So here goes: a simple 6-step process for using labels to speed up your ad testing. I’ve deliberately avoided talking about what you should be testing as that’s a whole other blog post, so sorry if this isn’t much help for newbies. The steps below assume you’re already running ad copy tests but haven’t tried using labels to help with them yet.

1. create and upload ads

Pretty self-explanatory really! We always recommend using Google AdWords Editor as it’s the simplest and cheapest (free!) tool to use. Because an ad test needs a lot of data to reach statistical significance, you’ll likely be running tests across multiple ad groups, and AdWords Editor makes this massively easier.

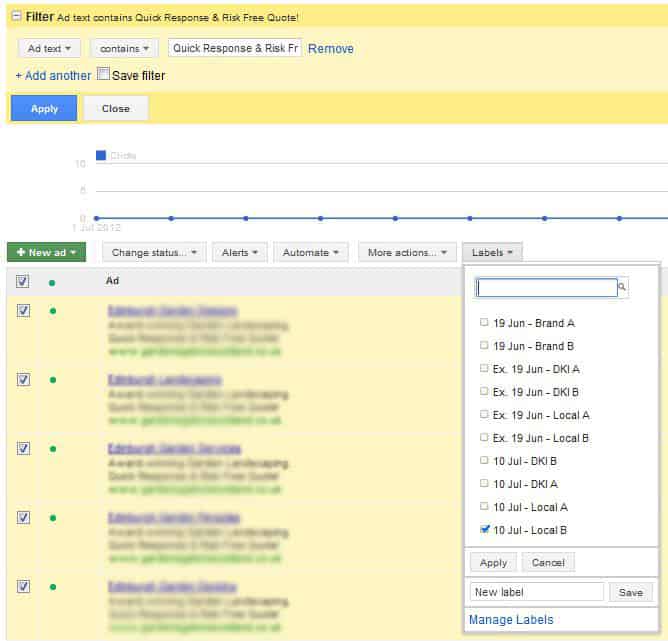

2. applying labels

Once all the ads are up, filter the ads by the distinguishing variations in the ad text, and then apply a label to them that includes the date, the test name and the variation number or identifying quality. Repeat this for the other variations in the test.

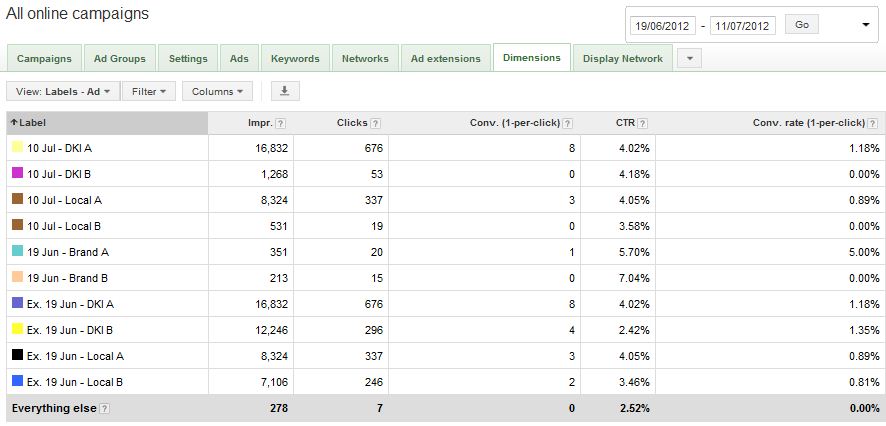
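If you run several tests at once, it helps to build every label name from the same three parts so they sort and filter predictably. Here’s a minimal sketch in Python; the `build_label` helper and the pipe-separated pattern are just our assumptions for illustration, not anything AdWords requires.

```python
from datetime import date


def build_label(start: date, test_name: str, variation: str) -> str:
    """Join start date, test name and variation into one label name.

    The 'date | test | variation' pattern is a hypothetical convention.
    """
    return f"{start.isoformat()} | {test_name.strip()} | {variation.strip()}"


# Example: one label per variation in the same test.
labels = [
    build_label(date(2012, 3, 1), "Free delivery", variation)
    for variation in ("V1", "V2")
]
print(labels)
```

Putting the date first in ISO format means the labels sort chronologically in the interface.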

3. save dimensions column layout

Go to the Dimensions tab and save a columns layout that will show Labels, Impressions, Clicks, Conversions, CTR and Conversion Rate. Then once your ads have begun collecting data, return to the Dimensions tab, select the saved column layout and you can quickly review (by selecting View/ Labels/ Labels – Ads from the drop-down at the left) how your ads are getting on. Because the date’s there, you’ll always know what date range to look back to when reviewing each test.

4. measuring the significance

Once you suspect your sample is large enough, download the Dimensions report. The next step is measuring the statistical significance of the results, and we recommend using this tool by Chad Summerhill. There’s an instructional video to explain more on how to use it. As I said above, grouping ad group data together can sometimes jeopardise the validity of the results, especially when ad groups have only small levels of traffic, as it ignores the fact that we’re actually running a different test in each ad group. If this is a concern for you then you may want to try Chad’s other tool (he’s a generous guy, eh?): the Cross Ad Group Testing Excel Template.

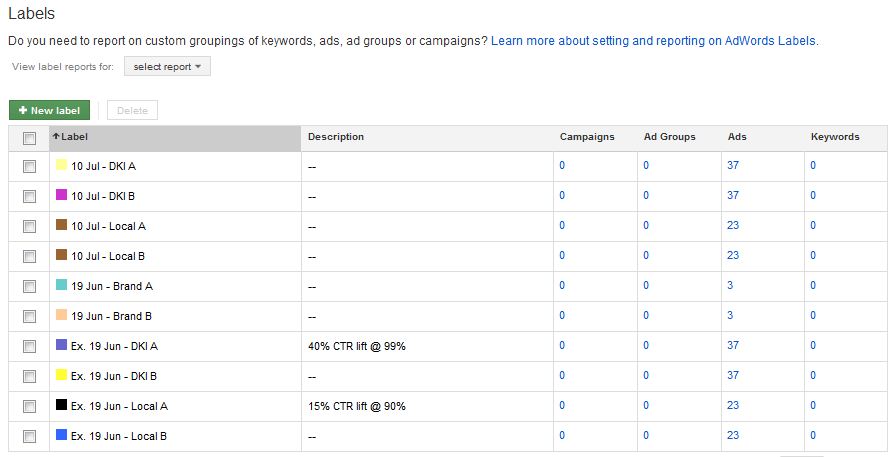
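If you’d like a rough idea of what a significance check involves, here’s a minimal sketch in Python of a standard pooled two-proportion z-test on CTR. To be clear, this is not Chad’s tool and we don’t know which method it uses; the function names and the example figures are made up for illustration, and like the pooled Dimensions totals it ignores differences between ad groups.

```python
import math


def two_proportion_z_test(clicks_a, impressions_a, clicks_b, impressions_b):
    """Pooled two-proportion z-test on CTR.

    Returns (z, two-sided p-value); a positive z means B has the higher CTR.
    """
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    # CTR of both variations combined, used for the standard error.
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    if se == 0:
        raise ValueError("no variation in the data; cannot run the test")
    z = (ctr_b - ctr_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def relative_uplift(clicks_a, impressions_a, clicks_b, impressions_b):
    """Relative CTR change of B over A (0.25 means B is 25% higher)."""
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    return (ctr_b - ctr_a) / ctr_a


# Hypothetical label totals taken from a Dimensions report.
z, p = two_proportion_z_test(100, 5000, 140, 5000)
uplift = relative_uplift(100, 5000, 140, 5000)
print(f"z = {z:.2f}, p = {p:.4f}, uplift = {uplift:.0%}")
```

A small p-value (commonly below 0.05) suggests the CTR difference is unlikely to be down to chance alone. The same function works for conversion rate if you pass conversions and clicks instead of clicks and impressions.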

5. end the test

Yay – statistical significance! Pause the losing ad variation. Then, in the Labels area of the interface (not the Dimensions tab; the link is at the bottom of the left-hand panel), add a description to the winning variation’s label noting the uplift and the confidence level reached. We’d also recommend renaming the labels at this point to show that the test has ended (but leave the date, name and variation info).

6. the next ad test

When you create the next test, in all likelihood you’ll want to continue testing the champion from the last test against the new contender. In this case, label the ads with the new test start date, name and variations as before, but keep the old label on the champion ad variation as well. A really good ad will display multiple coloured labels as badges of honour from all the ad copy tests it has succeeded in.

summary

Using labels to manage your ad copy tests means you don’t have to compile all your ad group data with a pivot table (the Dimensions tab does this for you), and you don’t have to keep a record of which ad tests are running (the labels themselves do this) or when they started (the label names do this). You can also keep the results of the tests in the actual interface rather than an external spreadsheet (with the Label description column).

other uses for labels

Ad copy is just one of the many things labels can help with. How about picking out your top performing keywords across your account, and then setting an automated rule to ensure these keywords maintain a certain ad position or to monitor any drop in their traffic levels? Or how about using labels to mark a recent upload of new keywords so you can monitor their performance separately from the original account? They can help with reporting too, particularly for agencies that manage multiple accounts and need to group them by the reporting they require.

It’s just a shame the latest update of AdWords Editor still doesn’t support them!

photo credit: foxypar4